I had the pleasure of presenting ‘Running ML Inference Services in Shared Hosting Environments’ at the MLOps: Machine Learning in Production Bay Area Virtual Conference. The presentation was based on the Nextdoor CoreML team's six years of experience productionizing and operating 30+ real-time ML microservices.

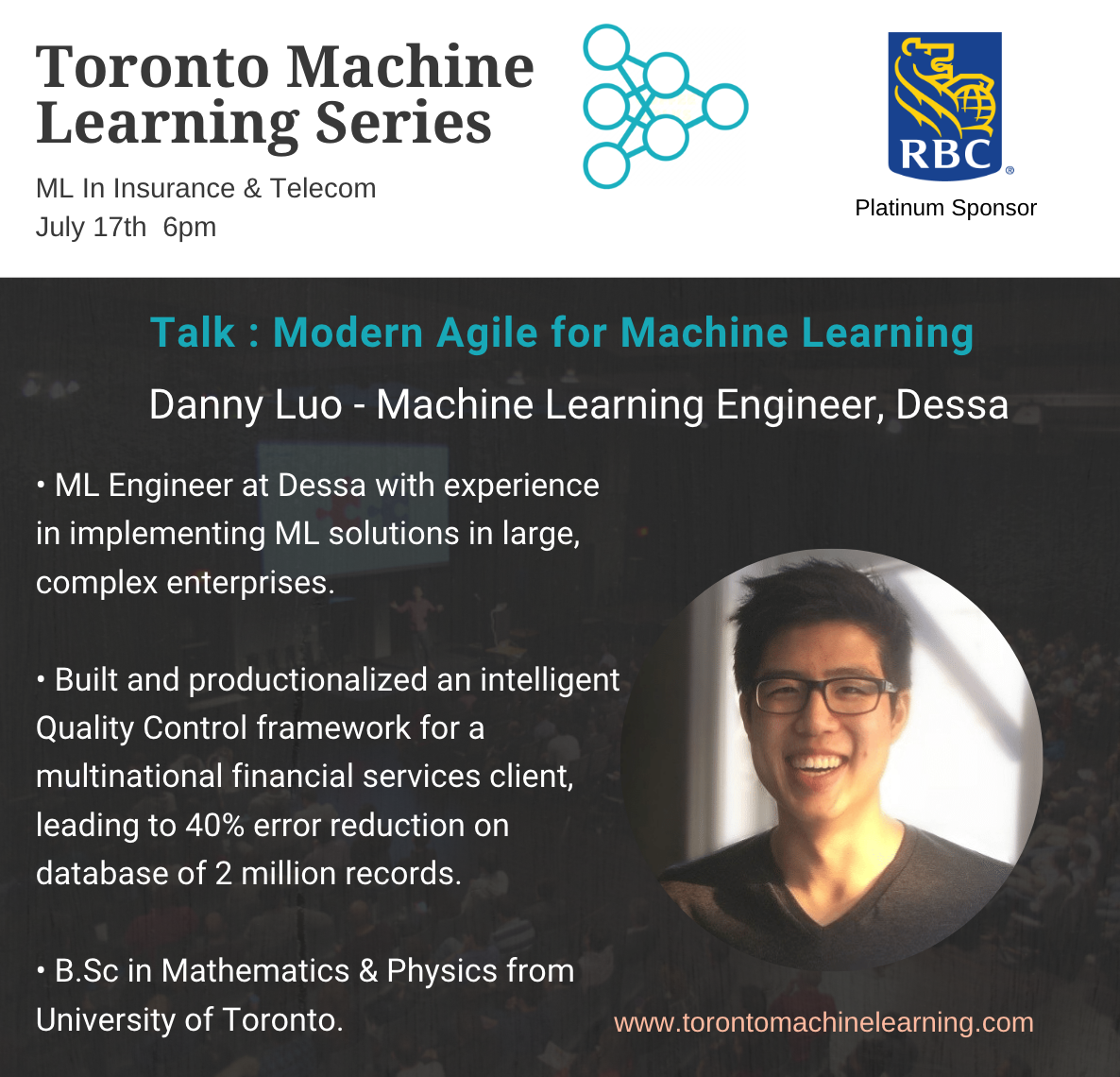

I had the pleasure of presenting ‘Modern Agile for Machine Learning’ at the Toronto Machine Learning Micro-Summit Series to 100+ industry machine learning practitioners. The talk was based on my personal experience applying Extreme Programming practices to enterprise ML projects at Dessa.

I had the pleasure of presenting the state-of-the-art NLP model, Google BERT, at the Toronto Deep Learning Series (TDLS) meetup. The recorded talk has 83,000+ views as of June 2024 and is the most-viewed lecture of TDLS (now called AISC).

In 2014, I entered the University of Toronto as an undergrad with a burning passion for physics. In 2018, I left the academic world to start a career in industry machine learning.

This is how I transitioned from academia to industry.

What is UofT like? Is it hard? Is it as depressing as people say it is? And what is POSt?

As a recent Bachelor of Science graduate, I answer these and more in this guide for new students.

At ZeroGravityLabs, Taraneh Khazaei and I co-authored a blog post that details how to resolve common Spark performance issues. It was featured on the Roaring Elephant - Bite-Sized Big Data podcast.

I had the pleasure of presenting how to set up Spark with Jupyter on AWS at Toronto Apache Spark #19.

HackOn(Data), Toronto’s very own data hackathon in the heart of downtown, is back for 2017!

At HackOn(Data) last year, I learned a lot, had fun, and made industry connections that landed my teammate and me great summer internships (see my blog post). This year, I plan to volunteer at HackOn(Data) 2017.

I highly recommend HackOn(Data). Register at hackondata.com/2017!