Multi-CPU Data Processing

20 Apr 2017

When the University of Toronto Data Science Team participated in Data Science Bowl 2017, we had to preprocess a large dataset (~150GB, compressed) of lung CT images. I was tasked with the following:

- Read data from S3 bucket

- Pre-process the lung CT images, following this tutorial

- Write the pre-processed image array back to S3

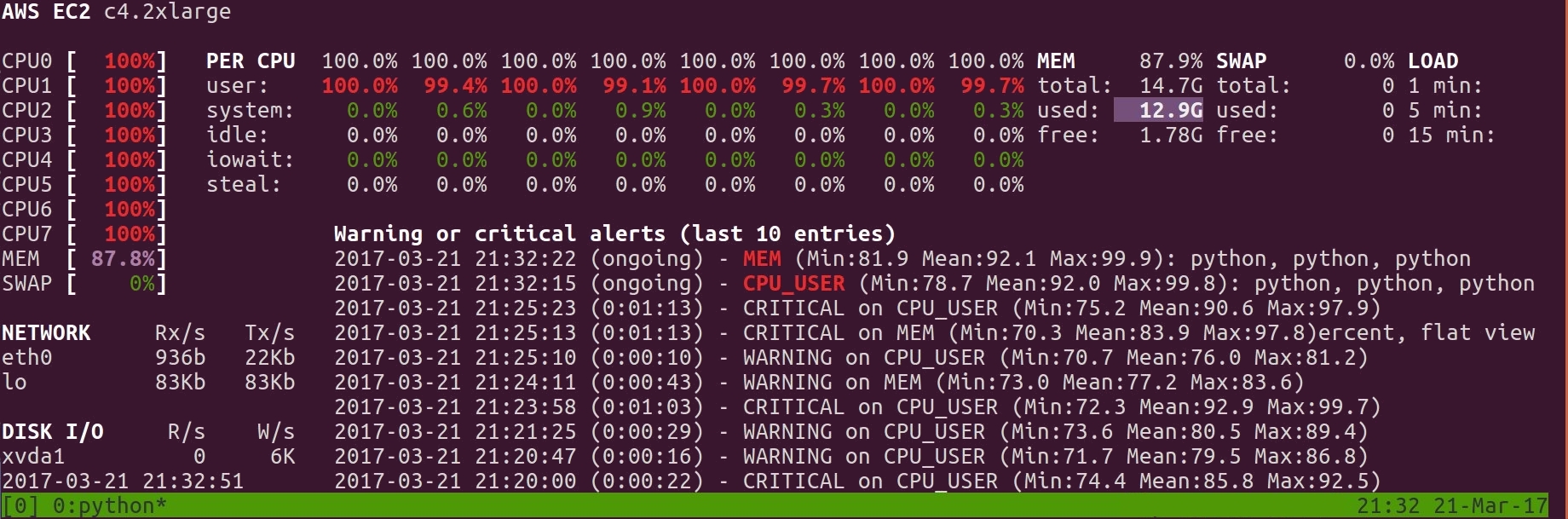

For S3 I/O on python, see my other post. In order to analyze the data efficiently, I used the python package multiprocessing to maximize CPU usage on an AWS compute instance. The result: Multi-CPU processing on a c4.2xlarge was 6 times faster than ordinary pre-processing on my local computer.

Setup

This will use the default python package multiprocessing. See the official documentation.

Multiprocessing

Doing basic Multi-CPU processing, or multiprocessing in general, is quite simple.

To use multiprocessing, you must first create a function that each process will run. Then, simply start all the processes.

Below, we initiate 2 processes that will run on 2 CPUs, each running function with arguments (arg1, arg2):

import multiprocessing

p1 = multiprocessing.Process(target=function, args=(arg1, arg2))

p2 = multiprocessing.Process(target=function, args=(arg1, arg2))

jobs = [p1, p2] #This allows you to access p1, p2 later

p1.start()

p2.start()

You can scale this up to your liking, or initiate them in a for loop.

To pre-process the Lung CT Images data, I divided the data into 12 sections and ran 12 processes on a c4.2xlarge, with 8 CPUs and 16GB of RAM. The reason I ran 12 processes on 8 CPUs is because roughly a third of the pre-processing time is used to download and upload data, which frees up the CPU for another process. This way, I ‘overload’ processes to ensure that the every CPU is being used at all times.

The result was that the multi-CPU pre-processing was 6 times faster than normal processing. However, if we use more powerful instances, such as the c4.8xlarge, which has 36 CPUs, we can cut down our processing time even more.

There are a lot more complex things you can do with the multiprocessing package, and we have just scratched the surface here. I found this to be a simple, yet powerful tool, whose usefulness will grow as the power of cloud computing increases.

Click here for the notebooks and module I used to preprocess the Kaggle Data Science Bowl Data.